On January 27, Kimi, a leading AI technology company and a Sky9 Capital portfolio company, announced the open-source release of Kimi K2.5, the most powerful open-source model to date.

Kimi K2.5 builds on Kimi K2 with continued pretraining over approximately 15T mixed visual and text tokens. Built as a native multimodal model, K2.5 delivers state-of-the-art coding and vision capabilities and introduces a self-directed agent swarm paradigm.

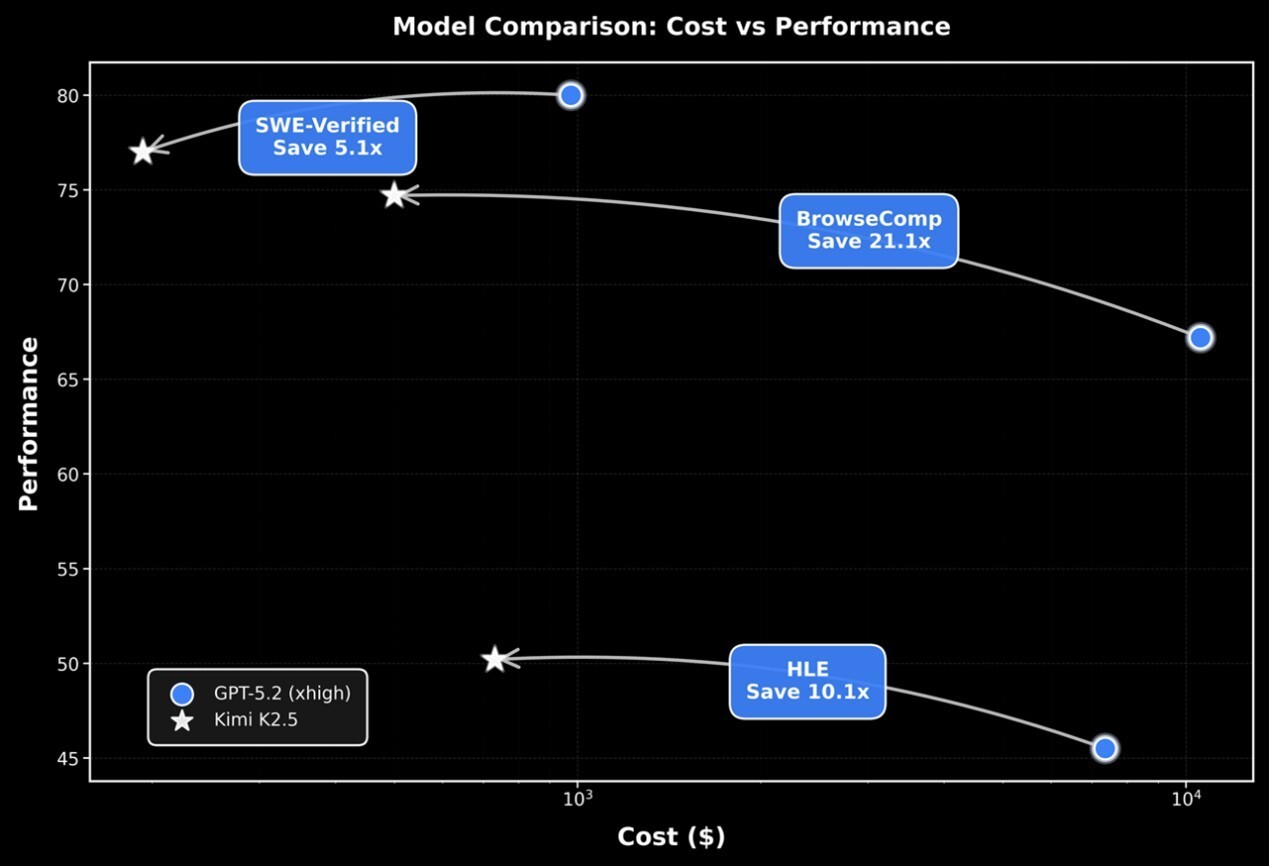

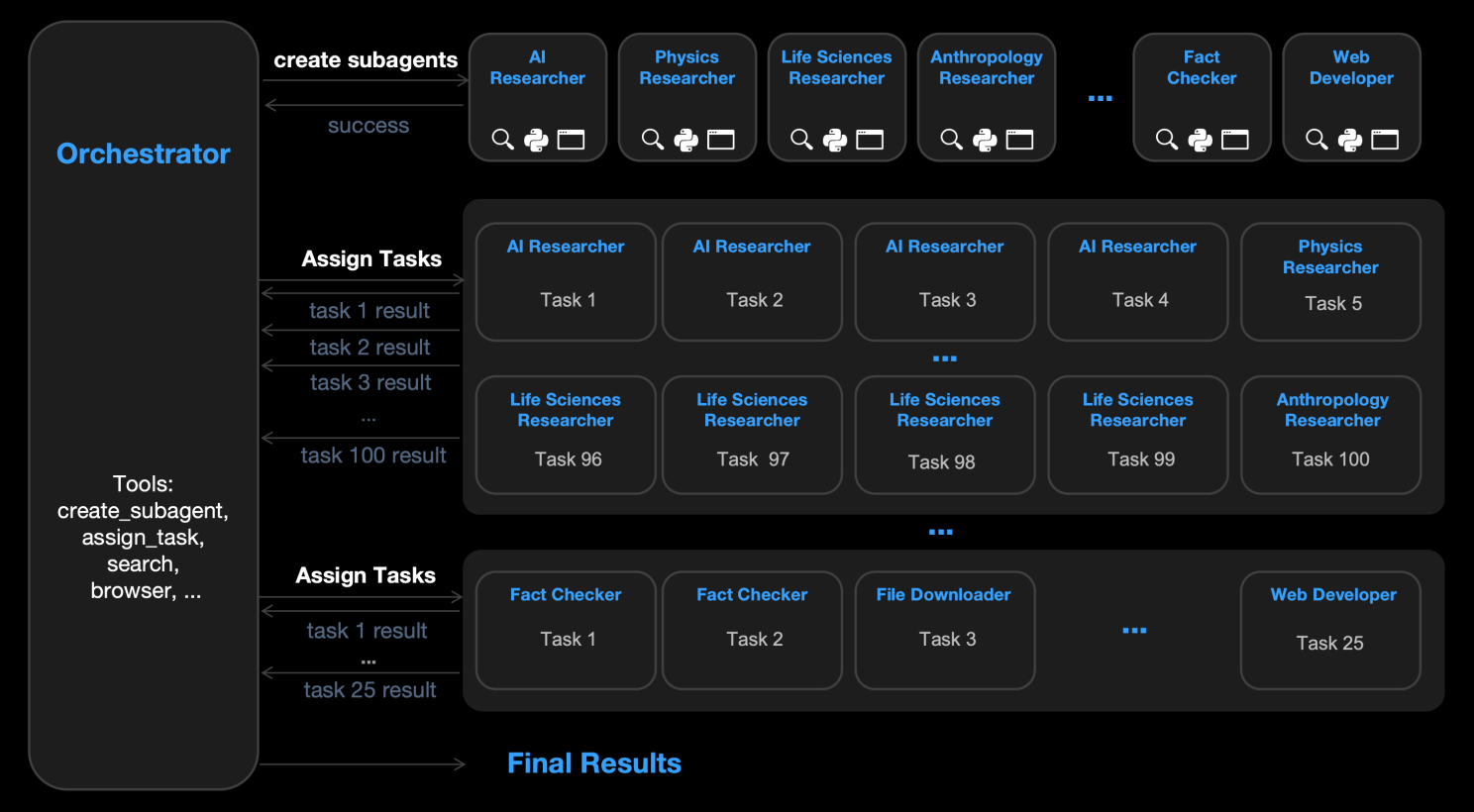

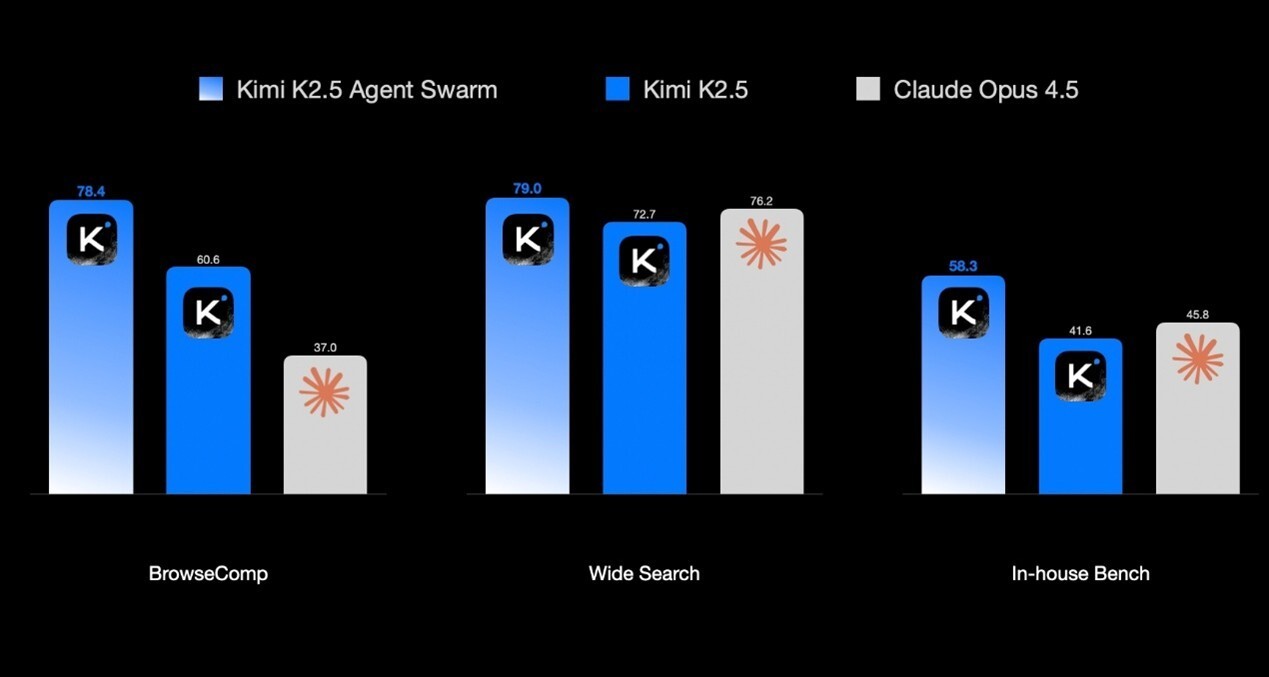

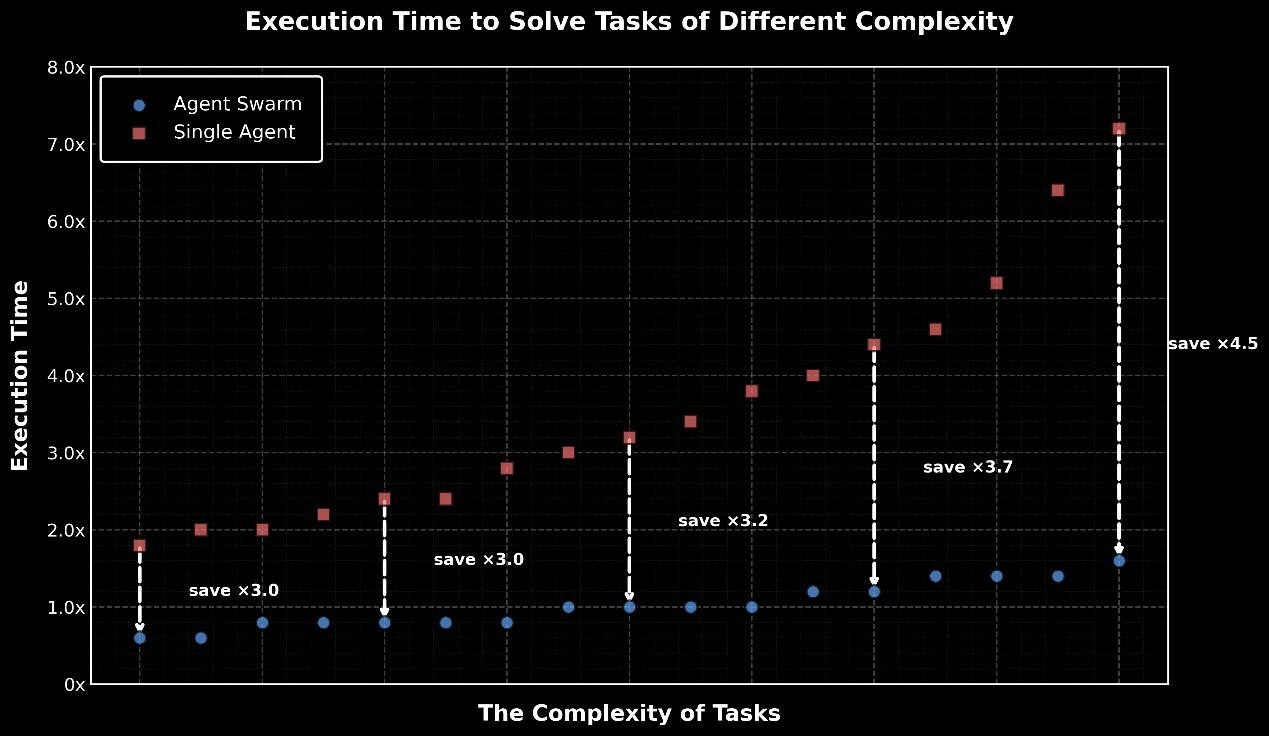

For complex tasks, Kimi K2.5 can self-direct an agent swarm with up to 100 sub-agents, executing parallel workflows across up to 1,500 tool calls. Compared with a single-agent setup, this reduces execution time by up to 4.5x. The agent swarm is automatically created and orchestrated by Kimi K2.5 without any predefined sub-agents or workflows.
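As a quick sanity check on the figures, a speedup factor and a percentage runtime reduction can be converted into each other with simple arithmetic. The sketch below assumes that "reduces execution time by up to 4.5x" means the runtime is divided by 4.5; the source does not spell this out, and the function names are illustrative only.

```python
def reduction_from_speedup(speedup: float) -> float:
    """Fraction of runtime saved when a job runs `speedup` times faster."""
    return 1.0 - 1.0 / speedup


def speedup_from_reduction(reduction: float) -> float:
    """Speedup factor implied by saving `reduction` (0..1) of the runtime."""
    return 1.0 / (1.0 - reduction)


if __name__ == "__main__":
    # Assumption: "4.5x" is read as runtime divided by 4.5.
    print(f"4.5x speedup -> {reduction_from_speedup(4.5):.1%} less runtime")
    # The 80% figure is the one reported in the Agent Swarm section.
    print(f"80% reduction -> {speedup_from_reduction(0.80):.1f}x speedup")
```

Under that reading, a 4.5x speedup corresponds to roughly a 78% shorter runtime, which is in the same range as the 80% end-to-end runtime reduction reported in the Agent Swarm section.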

Coding with Vision

Kimi K2.5 is the strongest open-source model to date for coding, with particularly strong capabilities in front-end development.

K2.5 can turn simple conversations into complete front-end interfaces, implementing interactive layouts and rich animations such as scroll-triggered effects. Below are examples generated by K2.5 from a single prompt with an image-generation tool.

Beyond text prompts, K2.5 excels at coding with vision. By reasoning over images and video, K2.5 improves image/video-to-code generation and visual debugging, lowering the barrier for users to express intent visually.

Here is an example of K2.5 reconstructing a website from video:

This capability stems from massive-scale vision-text joint pretraining. At scale, the trade-off between vision and text capabilities disappears, and the two improve in unison.

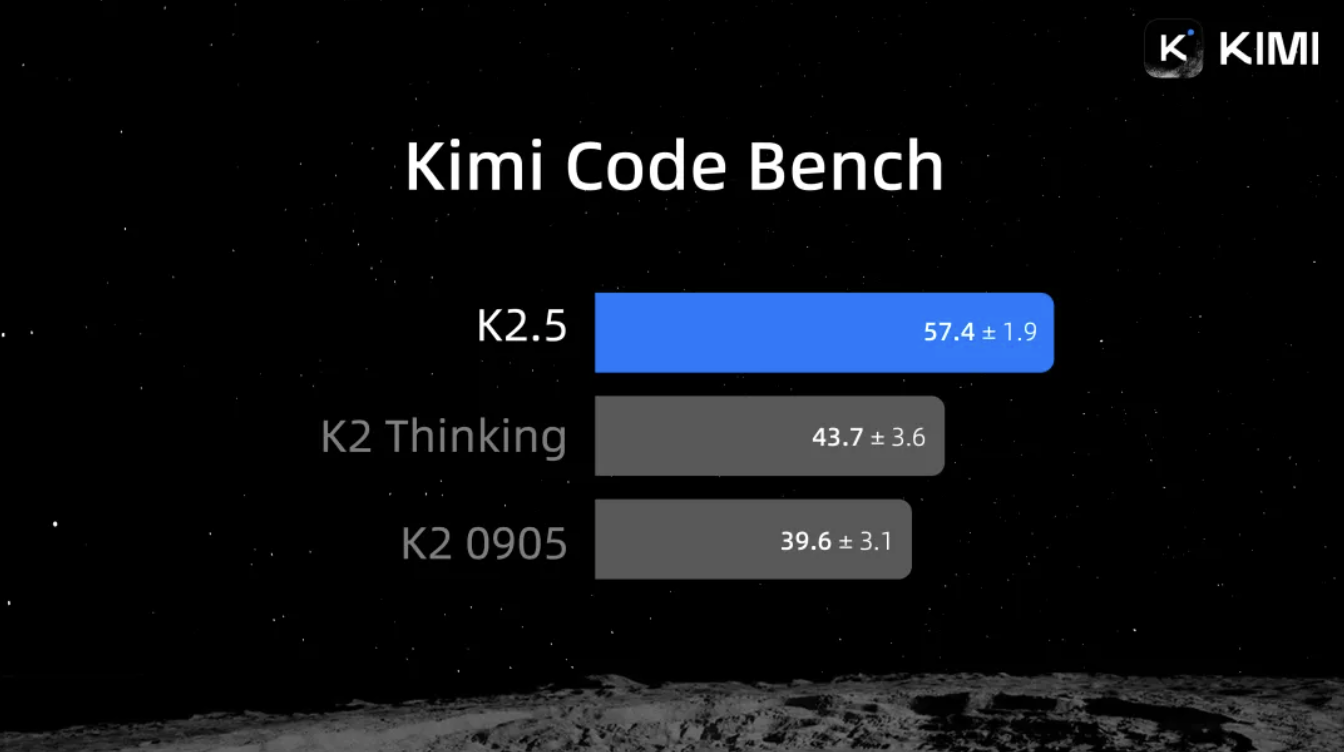

K2.5 excels in real-world software engineering tasks. On Kimi Code Bench, Kimi’s internal coding benchmark covering diverse end-to-end tasks (building, debugging, refactoring, testing, and scripting) across multiple programming languages, K2.5 shows consistent and meaningful improvements over K2 across task types.

For users who want to try out K2.5’s agentic coding capabilities, K2.5 Agent offers a set of preconfigured tools for an immediate, hands-on experience. For software engineering use cases, users can pair Kimi K2.5 with Kimi’s new coding product, Kimi Code.

Kimi Code works in the user’s terminal and can be integrated with various IDEs, including VSCode, Cursor, and Zed. Kimi Code is open-sourced and supports images and videos as inputs. It also automatically discovers existing skills and MCPs and migrates them into the user’s Kimi Code working environment.

Here’s an example using Kimi Code to translate the aesthetic of Matisse’s La Danse into the Kimi App. This demo highlights a breakthrough in autonomous visual debugging. Using visual inputs and documentation lookup, K2.5 visually inspects its own output and iterates on it autonomously. It creates an art-inspired webpage end to end:

Agent Swarm

Kimi released K2.5 Agent Swarm as a research preview, marking a shift from single-agent scaling to self-directed, coordinated swarm-like execution.

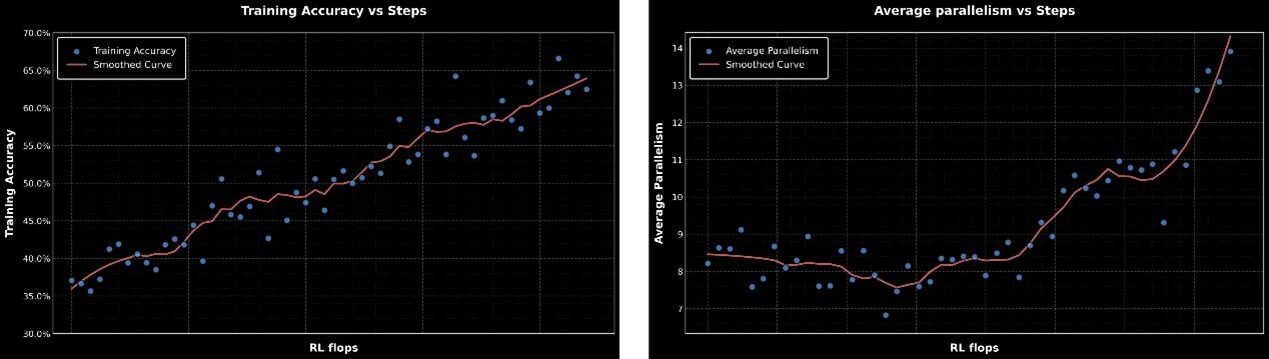

Trained with Parallel-Agent Reinforcement Learning (PARL), K2.5 learns to self-direct an agent swarm of up to 100 sub-agents, executing parallel workflows across up to 1,500 coordinated steps, without predefined roles or hand-crafted workflows.
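The fan-out shape described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Kimi's implementation: in K2.5 the decomposition into sub-agents is learned by the model, whereas here the subtasks are hard-coded, and `StepBudget`, `run_subagent`, and `orchestrate` are hypothetical names. The sketch also assumes that the limit of 100 applies to sub-agents running at the same time and that all sub-agents draw from one shared 1,500-step budget.

```python
import asyncio
from dataclasses import dataclass

MAX_SUBAGENTS = 100  # cap on sub-agents, taken from the text
MAX_STEPS = 1500     # overall step budget, taken from the text


@dataclass
class StepBudget:
    """Shared counter so every sub-agent draws from one step budget."""
    remaining: int = MAX_STEPS

    def take(self) -> bool:
        """Consume one step; return False once the budget is exhausted."""
        if self.remaining <= 0:
            return False
        self.remaining -= 1
        return True


async def run_subagent(task: str, steps_needed: int, budget: StepBudget) -> str:
    """Hypothetical sub-agent: each loop iteration stands in for one tool call."""
    done = 0
    for _ in range(steps_needed):
        if not budget.take():
            break
        await asyncio.sleep(0)  # placeholder for a real tool call
        done += 1
    return f"{task}: {done}/{steps_needed} steps"


async def orchestrate(subtasks: dict[str, int]) -> list[str]:
    """Run one sub-agent per subtask, at most MAX_SUBAGENTS concurrently."""
    budget = StepBudget()
    slots = asyncio.Semaphore(MAX_SUBAGENTS)

    async def guarded(task: str, steps: int) -> str:
        async with slots:
            return await run_subagent(task, steps, budget)

    # gather() returns results in the same order the subtasks were given.
    return await asyncio.gather(*(guarded(t, n) for t, n in subtasks.items()))


if __name__ == "__main__":
    # Illustrative subtasks only; the step counts are made up.
    demo = {"collect sources": 3, "draft summary": 2, "check figures": 4}
    for line in asyncio.run(orchestrate(demo)):
        print(line)
```

Sharing one budget object across sub-agents keeps the total number of steps bounded no matter how the work is split, which is one simple way to express a global limit alongside a per-swarm concurrency cap.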

K2.5 Agent Swarm improves performance on complex tasks through parallel, specialized execution. In internal evaluations, it leads to an 80% reduction in end-to-end runtime while enabling more complex, long-horizon workloads, as shown below.

Office Productivity

Kimi K2.5 brings agentic intelligence into real-world knowledge work.

K2.5 Agent can handle high-density, large-scale office work end to end. It reasons over large, information-dense inputs, coordinates multi-step tool use, and delivers expert-level outputs (documents, spreadsheets, PDFs, and slide decks) directly through conversation.

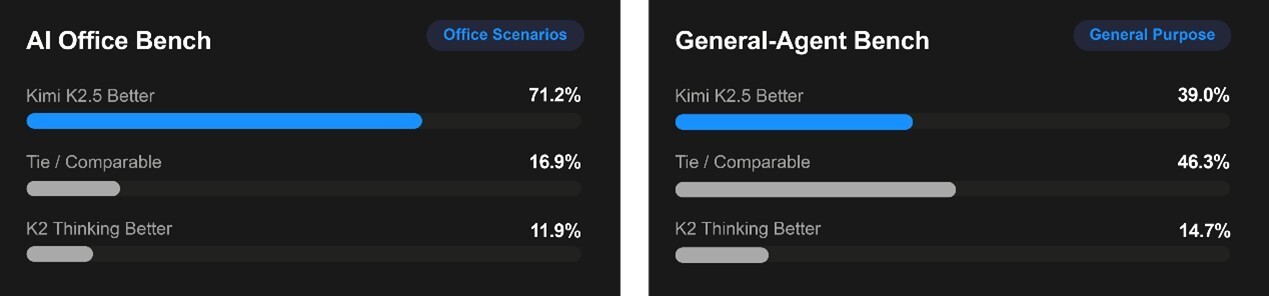

With a focus on real-world professional tasks, Kimi designed two internal expert productivity benchmarks. The AI Office Benchmark evaluates end-to-end Office output quality, while the General Agent Benchmark measures multi-step, production-grade workflows against human expert performance. On these two benchmarks, K2.5 shows improvements of 59.3% and 24.3%, respectively, over K2 Thinking, reflecting stronger end-to-end performance on real-world tasks.

K2.5 Agent supports advanced tasks such as adding annotations in Word, constructing financial models with Pivot Tables, and writing LaTeX equations in PDFs, while scaling to long-form outputs like 10,000-word papers or 100-page documents.

Grounded in advances in coding with vision, agent swarms, and office productivity, Kimi K2.5 represents a meaningful step toward AGI for the open-source community, demonstrating strong capability on real-world tasks under real-world constraints. Looking ahead, Kimi will push further into the frontier of agentic intelligence, redefining the boundaries of AI in knowledge work.